SOFTWARE VERIFICATION COURSE

MASTER II 2018-2019

Teacher's name: Pr Atsa Etoundi Roger

Abstract

This document presents the work carried out by the students of the MASTER 2 class of 2017-2018 for the software verification course. It is divided into five parts:

- statistical and functional approaches to testing,
- test data analysis, testability,
- static analysis techniques,
- dynamic analysis techniques,
- selected state-of-the-art results and real-world applications.

Statistical and functional approaches to testing

Introduction

Software testing is the process of analyzing software to find the differences between required and existing conditions. It is performed throughout the software development cycle in order to build quality software. Two basic approaches are used for this purpose: white box testing and black box testing. This part first presents black box testing techniques and then statistical testing.

I. Black box testing

Black box testing is an integral part of correctness testing, but its ideas are not limited to correctness testing.

In black box testing, the tester knows only the inputs (processed by the system) and the required outputs. In other words, the tester need not know the internal workings of the system.

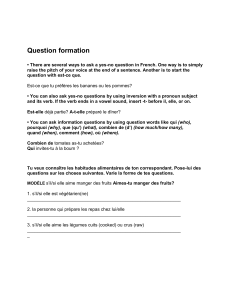
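
As a minimal sketch of this idea, assume a hypothetical function `is_leap_year` whose specification is the Gregorian leap-year rule. Neither the function nor the test data come from the course material; they are illustrative assumptions. The checks use only (input, required output) pairs taken from the specification and never inspect the body of the function, which stands in for the system under test.

```python
def is_leap_year(year: int) -> bool:
    """Hypothetical system under test; the tester treats this body as hidden."""
    return year % 4 == 0 and (year % 100 != 0 or year % 400 == 0)


# Black box test cases: (input, required output) pairs derived from the
# specification only, not from the code above.
SPEC_CASES = [
    (2016, True),
    (2019, False),
    (1900, False),
    (2000, True),
]


def run_black_box_tests(system, cases):
    """Feed each input to the system and collect (input, expected, actual)
    for every case where the observed output differs from the required one."""
    failures = []
    for given, expected in cases:
        actual = system(given)
        if actual != expected:
            failures.append((given, expected, actual))
    return failures
```

Because `run_black_box_tests` only calls `system(given)`, the same cases can be reused against any other implementation of the same specification.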

II. DIFFERENT FORMS OF BLACK BOX TESTING TECHNIQUE

The different forms of black box testing technique are (see figure):

For each form, we answer three questions: what? why? how?

1. Equivalence Partitioning

What?

Equivalence partitioning is a black box testing method that divides the input data of a software unit into partitions from which test cases can be derived.

In equivalence class partitioning, an equivalence class is formed of the inputs for which the behavior of the system is specified or expected to be similar.

An equivalence class represents a set of valid or invalid states for input conditions. See the figure.
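
The definition above can be sketched in a few lines of code. The specification used here (an age field that is valid from 18 to 65) and all the names (`age_class`, `partition`, `representatives`) are hypothetical and chosen only for illustration. The sketch groups sample inputs into equivalence classes and keeps one representative per class as a test input.

```python
from collections import defaultdict


def age_class(age: int) -> str:
    """Map an input to its equivalence class.
    Assumed specification: valid ages are 18..65 inclusive."""
    if age < 18:
        return "invalid_below"
    if age > 65:
        return "invalid_above"
    return "valid"


def partition(inputs, classify):
    """Group inputs by equivalence class, keeping input order within a class."""
    classes = defaultdict(list)
    for value in inputs:
        classes[classify(value)].append(value)
    return dict(classes)


def representatives(classes):
    """Pick the first member of each class as its test input."""
    return {name: members[0] for name, members in classes.items()}


sample_inputs = [5, 17, 18, 40, 65, 66, 90]
classes = partition(sample_inputs, age_class)
test_inputs = representatives(classes)
```

Seven sample inputs reduce to three test inputs, one per class, on the assumption that the system treats every member of a class the same way.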

Why?

The issue is to select the test cases suitably. Since an equivalence class is formed of the inputs for which the behavior of the system is specified or expected to be similar, a few representative values from each class can stand in for the whole class.

How?

Some guidelines for equivalence partitioning are given below:

1) One valid and two invalid equivalence classes are defined if an input condition specifies a range.

2) One valid and two invalid equivalence classes are defined if an input condition requires a specific value.

3) One valid and one invalid equivalence class are defined if an input condition specifies a member of a set.

4) One valid and one invalid equivalence class are defined if an input condition is Boolean.
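
The four guidelines above can be sketched as small helper functions, one per kind of input condition. All the names below are hypothetical. For guideline 2, the two invalid classes are assumed to be the values below and above the required value. For guideline 4, the valid class is assumed to be the case where the condition holds. Each helper returns a dictionary mapping a class name to a predicate that accepts the members of that class.

```python
def classes_for_range(low, high):
    """Guideline 1: a range gives one valid and two invalid classes."""
    return {
        "valid": lambda x: low <= x <= high,
        "invalid_below": lambda x: x < low,
        "invalid_above": lambda x: x > high,
    }


def classes_for_value(required):
    """Guideline 2: a specific value gives one valid and two invalid classes
    (assumed here to be the values below and above the required one)."""
    return {
        "valid": lambda x: x == required,
        "invalid_below": lambda x: x < required,
        "invalid_above": lambda x: x > required,
    }


def classes_for_set(allowed):
    """Guideline 3: membership of a set gives one valid and one invalid class."""
    return {
        "valid": lambda x: x in allowed,
        "invalid": lambda x: x not in allowed,
    }


def classes_for_boolean():
    """Guideline 4: a Boolean condition gives one valid and one invalid class
    (assumed here: valid when the condition holds)."""
    return {
        "valid": lambda x: x is True,
        "invalid": lambda x: x is not True,
    }


def classify(value, classes):
    """Return the name of the single class whose predicate accepts value."""
    matches = [name for name, accepts in classes.items() if accepts(value)]
    if len(matches) != 1:
        raise ValueError("equivalence classes must cover each input exactly once")
    return matches[0]
```

For example, `classify(101, classes_for_range(1, 100))` places the input 101 in the `invalid_above` class, so a single test with 101 covers that class.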
