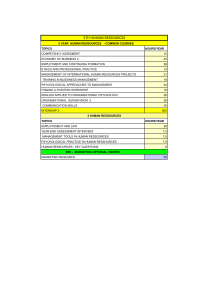

SLIDE 2

The topic of my internship was Scheduling in high performance computing. It is the process of arranging, controlling and

optimizing work and ressources for a set of jobs to compute.

It is an important tool for engineering, where it can have a major impact on the efficiency of the processing to minimize the

production time and costs. The most common definition of scheduling problem only relies on the number of processors at our

disposal and I will present it in the two first parts. Because of the ever-growing amount of data produced by modern scientific

applications, it make sense to also consider other ressources, especially related to memory. To better take that into account, we

worked on an extended version of the problem with different ressouce types that I will present in section 3. Finally I also worked

on another problem that manages the possible crashes or failures in processing and will present that in section 4 and 5.

SLIDE 4

- Processes can be divided in n sub-jobs

- Some may need to be processed before others. We represent that by precedence constraints between two jobs. Can be seen as a

DAG where edges=precedence constraints and vertices=jobs

- We have P processors, we can allocated as many as we want for each jobs. Allocated more processors will reduce the time. We

suppose perfect knowledge of the problem : we know exactly how long a job will take for all possible processor allocation

- Of course, we can’t use more than P processors.

We wish to find a way to allocate processors and to find in which order we should process the jobs, to reduce the total time of

processing.

SLIDE 5

- In this slide we have an example of an input

- The double entry table is the time function (exemple)

- The graphs represent precedence constraints

SLIDE 6

- To solve this problem, we use different variables that are easy to compute : work, area and critical path

- The work can be seen as the total number of operation we need to make. It is the time multiplied by the number of processors.

Increasing, otherwise perfect speed up. The figure is the vizualized representation of the work (+example)

- The area is the total work normalized by the number of processors. We can view area related to a job as the area of the rectangle,

x axis time, y axis proportion of processor used. Total area is the time required if all processors work all the time, thus it is a lower

bound

- The critical path is time required to process the longest path, if we put highest priority on it. It is also very easy to compute (we

just need to compute the shortest time of completion for all tasks). Lower bound

SLIDE 7

In the next section, we will present a classic scheduling algorithm

SLIDE 8

- CP and A are lower bound, it make sense to try to minimize the max of them over all posible allocation. Here it is 10 and given by

the red allocation.

- As Work increase, reducing CP will increase the Area, that’s why we call it a tradeoff

- NP-complete, 2 approx

SLIDE 9

- We chose this allocation and process jobs as soon as possible.

- Priority rule between jobs (2-3 tie) for example highest processor allocation first. + dérouler algo

SLIDE 10

Now I will present the problem I mainly worked on in my internship, that consider multiple ressource types.

SLIDE 11

- As I explained, the number of processors to allocate is not the only impacting ressource for the time of process.

- We suppose we have d ressources type, correspond to the problem in multiple dimensions.

- Instead of chosing a certain amount of processors, we chose a certain amount of ressource for each type, without surpassing the

resource capacity Pi

- For the area, we extend the previous definition to each ressource and normalize it by d. Once again it Is the time required if all

ressources are always used.

SLIDE 12

- Number of ressource can differ for each type

- The time may depends of all ressources type

- For realisation, the total number of ressource can exceed max capacity

- Of cours the rectange representing jobs have same length and same start date for all ressources

SLIDE 13

Time decrease

Time can’t increase to much (questionnable, but we basically need that to be true when doable).

The previous asumption of increasing area doesn’t hold anymore

But the area of the ressource reduced the most increase (red)

SLIDE 14

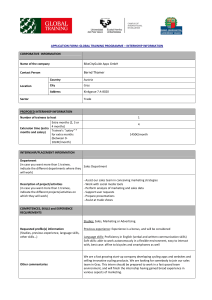

The following table represents the previous results compared to my result

- general problem : actually there is to my knowledge no previous work. But strategy in Sun, 2018 can be applied and we get

5.44d, so we needed to beat that. Approximation ratio always between d and … towards infinity equivalent to d

- Independant subcase, beated previous results

The two other problems are a bit simpler, we are always after d. Internship adviser thinks the third problem offline we can’t beat d,

so my results are quite tight

- Even if we optimize perfectly allocation or scheduling, we still at d

Would need breakthrough idea.

SLIDE 15

Now I will present the second variant I’ve worked on, taking into account the fact that jobs may fail

SLIDE 16

blabla

+ Two algorithm, one does smart local allocation that doesn’t depend on failures + classic scheduling

- Constant approx for many classic speedup models, but can be up to a sqrt(P) factor of error

1

/

2

100%