WDM-PON Scheduling Algorithms: Design & Performance Analysis

Telechargé par

KHAOULA LATRECHE

JOURNAL OF LIGHTWAVE TECHNOLOGY, VOL. 23, NO. X, MONTH 2005 1

Design and Performance Analysis of Scheduling

Algorithms for WDM-PON under

SUCCESS-HPON Architecture

Kyeong Soo Kim, Member, IEEE, David Gutierrez, Student Member, IEEE, Fu-Tai An, Member, IEEE,

and Leonid G. Kazovsky, Fellow, IEEE, Fellow, OSA

Abstract—We report the results of our design and performance

analysis of two new algorithms for efficient and fair schedul-

ing of variable-length frames in a wavelength division multi-

plexing (WDM)-passive optical network (PON) under Stanford

University aCCESS-Hybrid PON (SUCCESS-HPON) architec-

ture. The WDM-PON under the SUCCESS-HPON architecture

has unique features that have direct impacts on the design of

scheduling algorithms: First, an optical line terminal (OLT) uses

tunable transmitters and receivers that are shared by all the

optical network units (ONUs) served by the OLT to reduce the

number of expensive dense WDM (DWDM) transceivers. Second,

also for cost reduction, ONUs have no local DWDM light sources

but use optical modulators to modulate optical continuous wave

(CW) bursts provided by the OLT for upstream transmissions.

Therefore, the tunable transmitters at the OLT are used for

both upstream and downstream transmissions. To provide ef-

ficient bidirectional communications between the OLT and the

ONUs and guarantee fairness between upstream and downstream

traffic, we have designed two scheduling algorithms – batching

earliest departure first (BEDF) and sequential scheduling with

schedule-time framing (S3F). The BEDF is based on the batch

scheduling mode where frames arriving at the OLT during a

batch period are stored in virtual output queues (VOQs) and

scheduled at the end of the batch period. It improves transmission

efficiency by selecting the frame with the earliest departure

time from a batch of multiple frames, which optimizes the

usage of tunable transmitters in scheduling. Considering the high

complexity of the optimization process in BEDF, we have also de-

signed the S3F based on the sequential scheduling mode as in the

original sequential scheduling algorithm proposed earlier. In S3F

we use VOQs to provide memory space protection among traffic

flows and a granting scheme together with schedule-time framing

for both upstream and downstream traffic to reduce framing

and guard band overhead. Through extensive simulations under

various configurations of the tunable transmitters and receivers,

we have demonstrated that both the BEDF and S3F substantially

improve the throughput and delay performances over the orig-

inal sequential scheduling algorithm, while guaranteeing better

fairness between upstream and downstream traffic.

Index Terms—Access, media access control (MAC) protocols,

passive optical network (PON), scheduling, wavelength division

multiplexing (WDM)

This work was supported in part by the Stanford Networking Research

Center and STMicroelectronics.

K. S. Kim is with the Advanced System Technology, STMicroelectronics,

Stanford, CA 94305, USA (e-mail: [email protected]).

D. Gutierrez and L. G. Kazovsky are with the Photonics and Networking

Research Laboratory, Stanford University, Stanford, CA 94305, USA (e-mail:

{degm,kazovsky}@stanford.edu).

F-T. An is with the Marvell Technology Group Ltd. (e-

mail:ftan@stanfordalumni.org).

This paper was presented in part at GLOBECOM 2004, Dallas, TX,

November, 2004.

I. INTRODUCTION

Efficient and fair scheduling of variable-length messages

under the constraints of shared resources is critical for the suc-

cess of advanced, next-generation wavelength-routed optical

networks where tunable transmitters and receivers are shared

by many users in order to reduce the high cost of wavelength

division multiplexing (WDM) optical components.

The scheduling problem we study in this paper is for

a WDM-passive optical network (PON) under Stanford

University aCCESS-Hybrid PON (SUCCESS-HPON) archi-

tecture, which was proposed for next-generation hybrid

WDM/time division multiplexing (TDM) optical access net-

works [1].1The SUCCESS-HPON architecture is based on a

topology consisting of a collector ring and several distribution

stars connecting a central office (CO) and optical networking

units (ONUs). By clever use of coarse WDM (CWDM) and

dense WDM (DWDM) technologies, it guarantees the coex-

istence of current-generation TDM-PON and next-generation

WDM-PON systems on the same network. The semi-passive

configuration of remote nodes (RNs) together with the hybrid

topology also enables supporting both business and residential

users on the same access infrastructure by providing protection

and restoration capability, a frequently missing feature in

traditional PON systems.

In designing the SUCCESS-HPON architecture, we mainly

focused on providing economical migration paths from the

current-generation TDM-PONs to future WDM-based optical

access networks. This has been achieved by sharing some

high-performance but costly components and resources in

SUCCESS WDM-PON2: First, an optical line terminal (OLT)

uses tunable transmitters and receivers that are shared by

all the optical network units (ONUs) served by the OLT to

reduce the number of expensive DWDM transceivers. Second,

also for cost reduction, ONUs have no local DWDM light

sources but use optical modulators to modulate optical con-

tinuous wave (CW) bursts provided by the OLT for upstream

transmissions. Therefore, the tunable transmitters at the OLT

are used for both upstream and downstream transmissions.

The sharing of tunable transmitters and receivers at the OLT

and the use of tunable transmitters for both upstream and

1We have changed the name of the architecture from SUCCESS to

SUCCESS-HPON to distinguish it from other architectures under the same

research initiative of SUCCESS at PNRL, Stanford.

2In this paper we use the term SUCCESS WDM-PON to denote the WDM-

PON under the SUCCESS-HPON architecture.

2 JOURNAL OF LIGHTWAVE TECHNOLOGY, VOL. 23, NO. X, MONTH 2005

downstream transmissions, however, pose a great challenge in

designing scheduling algorithms: A scheduling algorithm for

the SUCCESS WDM-PON has to keep track of the status of all

shared resources (i.e., tunable transmitters, tunable receivers

and wavelengths assigned to ONUs) and arrange them properly

in both time and wavelength domains to avoid any conflicts

among them for both upstream and downstream transmissions.

While many researchers have studied the issue of scheduling

messages in both time and wavelength domains in network

architectures based on tunable transmitters and/or receivers

(e.g., [2]–[5]), only a few schemes have been proposed to sup-

port variable-length message transmissions without segmenta-

tion and reassembly processes. In [4], we studied scheduling

algorithms for unslotted carrier sense multiple access with

collision avoidance (CSMA/CA) with backoff media access

control (MAC) protocol to address the issues of fairness and

bandwidth efficiency in multiple-access WDM ring networks.

In [5], the authors studied distributed algorithms for scheduling

variable-length messages in a single-hop multichannel local

lightwave network with a focus on reducing tuning overhead.

To the best of our knowledge, however, scheduling algorithms

for a network where tunable transmitters are used for both

upstream and downstream transmissions as in the SUCCESS

WDM-PON, have not been investigated by other researchers.

In [1] we proposed a sequential scheduling algorithm for

the SUCCESS WDM-PON, which emulates a virtual global

first-in-first-out (FIFO) queueing for all incoming frames. In

this algorithm incoming frames are scheduled sequentially

in the order of arrival at the OLT. This original sequential

scheduling algorithm is simple to implement, but suffers from

poor transmission efficiency and fairness guarantee between

upstream and downstream traffic.

To address the limitations of the original sequential schedul-

ing algorithm, we propose in this paper two new scheduling

algorithms – batching earliest departure first (BEDF) and

sequential scheduling with schedule-time framing (S3F). The

key idea in the design of BEDF is to provide room for

optimization and priority queueing by scheduling over more

than one frame: In BEDF, frames arriving at the OLT during

a batch period are stored in virtual output queues (VOQs)

and scheduled at the end of the batch period, which allows

in scheduling to select the best frame according to a given

optimal scheduling policy from the batch of multiple frames

in the VOQs. We choose the EDF as an optimal scheduling

policy to minimize the unused time of the tunable transmitters.

The throughput versus scheduling delay tradeoff is a major

design issue in BEDF.

In S3F, considering the high complexity of the BEDF

optimization process, we adopt the sequential scheduling mode

as in the original sequential scheduling algorithm, but use

VOQs to provide memory space protection among traffic flows

as in BEDF and a granting scheme together with schedule-time

framing for both upstream and downstream traffic to reduce

overhead due to framing and guard bands.

The rest of the paper is organized as follows. In Section

II we provide a high-level overview of the SUCCESS-HPON

architecture and review the MAC protocol, frame formats and

original sequential scheduling algorithm for the WDM-PON

under the SUCCESS-HPON architecture. In Section III we

describe the BEDF and S3F scheduling algorithms based on

the system model and procedures used in the description of

the original sequential scheduling algorithm in Section II. In

Section IV, we provide the results of the performance analysis

of the designed scheduling algorithms through simulations.

Section V summarizes our work in this paper and discusses

future directions for further studies.

II. WDM-PON UNDER SUCCESS-HPON

ARCHITECTURE

A. Overall Architecture

A high-level overview of the SUCCESS-HPON, including

TDM-PONs and WDM-PONs as its subsystems with wave-

length allocations, is shown in Fig. 1. A single-fiber collector

ring with stars attached to it formulates the basic topology.

The collector ring strings up RNs, which are the centers of

the stars. The ONUs attached to the RN on the west side of

the ring talk and listen to the transceivers on the west side of

the OLT, and likewise for the ONUs attached to the RN on

the east side of the ring. Logically there is a point-to-point

connection between each RN and the OLT. No wavelength is

reused on the collector ring. When there is a fiber cut, the

affected RNs will switch to the transceivers on the other side

of the OLT for continuous operations as soon as they sense a

signal loss.

The RN for TDM-PON has a pair of CWDM band splitters

to add and drop wavelengths for upstream and downstream

transmissions, respectively. On the other hand, the RN for

WDM-PON has one CWDM band splitter, adding and drop-

ping a group of DWDM wavelengths within a CWDM grid,

and a DWDM MUX/DEMUX device, i.e., arrayed waveguide

grating (AWG), per PON. Each ONU has its own dedicated

wavelength for both upstream and downstream transmissions

on a DWDM grid to communicate with the OLT. Since the

insertion loss of a typical AWG is roughly 6 dB regardless of

the number of ports, AWGs with more than eight ports will

likely be employed to enjoy better power budget compared to

passive splitters.

Fig. 2 shows block diagrams of the portion of the OLT and

the ONU for the SUCCESS WDM-PON. Tunable components,

such as fast tunable lasers and tunable filters are employed for

DWDM channels. Because the average load of the network is

usually lower than the peak load [6], we can expect statistical

multiplexing gain by sharing tunable components at the OLT,

which also reduces the total system cost by minimizing the

transceiver count for a given number of ONUs and user

demand on bandwidth. Downstream optical signals from the

tunable transmitters in DWDM channels enter both ends of

the ring through passive splitters and circulators. Upstream

optical signals from the ring pass the same devices but in

reverse order and are separated from the downstream signals

by the circulators. The scheduler controls the operation of

both tunable transmitters and tunable receivers based on the

scheduling algorithms that will be described in Section III.

Note that the tunable transmitters at the OLT are used

for both downstream frames and CW optical bursts to be

KIM et al.: SCHEDULING ALGORITHMS FOR WDM-PON UNDER SUCCESS-HPON 3

RN

RN

RN

RN

λ

*1,

λ

2

λ

1

λ

2

λ

21

λ

22

λ

23

λ

*1

λ

*3,

λ

4, …

λ

1,

λ

2

λ

3,

λ

4, …

λ

4

λ

*3

λ

3

λ

41

λ

42

λ

43

TDM-PON ONU

RN TDM-PON RN

WDM-PON ONU

RN WDM-PON RN

Central

Office

Fig. 1. Overview of SUCCESS-HPON.

Scheduler

Downstream

Traffic Queues

Tunable

Transmitter 1

. . .

. . .

Tunable

Transmitter M

Tunable

Receiver 1

. . .

Tunable

Receiver N

Upstream

Grant Queues Circulator

M:2 Passive Splitter

. . .

Upstream Traffic

To the Network N:2 Passive Splitter

(a)

Burst-Mode

Receiver

Modulator

MAC

Downstream

Traffic

Upstream Traffic

Queue

Circulator

1:2 Passive Splitter

(b)

Fig. 2. Block diagrams of (a) the portion of OLT and (b) the ONU for

SUCCESS WDM-PON.

modulated by the ONUs for their upstream frames. With this

configuration only half-duplex communications are possible at

the physical layer between the OLT and each ONU using a

variation of the time compression multiplexing (TCM) scheme

[7]. Compared to a similar architecture with a two-fiber ring,

two sets of light sources and two sets of MUX/DEMUX

for full-duplex communications [8], our design significantly

lowers deployment cost. As a tradeoff, however, we need a

careful design of a scheduling algorithm to provide efficient

bidirectional communications at the MAC layer.

As discussed before, the ONU has no local light source

and uses an optical modulator to modulate optical CW bursts

received from the OLT for its upstream transmission. A semi-

conductor optical amplifier (SOA) can be used as a modulator

for this purpose [9]. The ONU MAC protocol block not

only controls the switching between upstream and downstream

transmissions but also coordinates with the scheduler at the

OLT through a polling mechanism.

For implementation details, especially at the physical layer

8-Bit

Flags

Ethernet Frame

Grant (CW)

or

8-Bit

Preamble 16-Bit

Delimiter

Overhead

16-Bit

Report Ethernet Frame …

For

Downstream

For

Upstream

8-Bit

Preamble 16-Bit

Delimiter

Overhead

16-Bit

Grant

8-Bit

Flags

8-Bit

Preamble 16-Bit

Delimiter

Overhead

Ethernet FrameEthernet Frame …

Order of Transmission

Fig. 3. Frames formats for SUCCESS WDM-PON MAC protocol.

of the SUCCESS WDM-PON, readers are referred to [1].

B. MAC Protocol and Frame Formats for SUCCESS WDM-

PON

Like APON and EPON systems [10], the SUCCESS WDM-

PON OLT polls to check the amount of upstream traffic stored

at the ONUs and sends grants – but in the form of optical CW

bursts in this case – to allow the ONUs to transmit upstream

traffic. Since there is neither a separate control channel nor

a control message embedding scheme using escape sequences

as in [11], the MAC protocol has to rely on in-band signaling

and uses the frame formats shown in Fig. 3, where the report

and grant fields are defined for the polling process.3Note that

the 1-bit ‘ID’ field in [1] for downstream frames has been

extended to 8-bit flags for future extensions: Now the ‘Frame

Type’ field of the flag is used to indicate whether this frame is

for normal data traffic or not. Usage of the fields in the 8-bit

flags is summarized in Table I.

Each ONU reports the amount of traffic waiting in its

upstream traffic queue in octets through the report field in an

upstream frame when the ‘Force Report’ field of a received

downstream frame is set4, and the OLT uses the grant field

3In this paper, we assume that Ethernet frames are carried in the payload

part of SUCCESS WDM-PON frames. Note that, however, any other protocol

frame or packet. e.g., IP packets, can be encapsulated and carried in a

SUCCESS WDM-PON frame because it does not depend on any specific

layer 2 or 3 protocols unlike APON or EPON.

4In this paper, we assume this field is always set.

4 JOURNAL OF LIGHTWAVE TECHNOLOGY, VOL. 23, NO. X, MONTH 2005

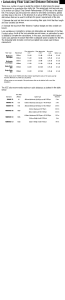

TABLE I

8-BIT FLAGS IN DOWNSTREAM SUCCESS WDM-PON FRAME

Bit Field Values

0 - Normal Data

0-3 Frame Type 1 - Grant

2-15 - Unused

0 - No action required

4 Force Report 1 - ONU should report in the corresponding

upstream frame

5 Unused -

6 Unused -

7 Unused -

to indicate the actual size of each grant (also in octets). Note

that, as shown in Fig. 3, the length of the whole CW burst

corresponds to that of all upstream Ethernet frames (i.e., the

size of grant) plus the report field and the overhead.

We use two control parameters to govern the polling process

consisting of reporting and granting operations as follows:

•ON U T IM EOUT : The OLT maintains one timer per

ONU and resets it whenever a grant frame is sent down-

stream to an ONU. It clears the timer when the corre-

sponding upstream frame with a nonzero report field is re-

ceived. If the timer expires after the ON U T IM EOUT

period, which means either there was no upstream traffic

when the ONU received a grant frame or the report

message was lost during the transmission to the OLT,

the OLT sends a new grant to poll that ONU again and

resets the timer. This parameter keeps the polling process

going on even in the case of the loss of polling messages

and bounds the maximum polling cycle. It also affects the

average packet delay of upstream traffic when the system

is under light load.

•MAX GRANT : This parameter limits the maximum

size of a grant (i.e., the payload part of the CW burst)

for ONU upstream traffic.

C. Original Sequential Scheduling Algorithm

Here we describe how the scheduling of transmission and/or

reception of a SUCCESS WDM-PON frame is done under

the original sequential scheduling algorithm proposed in [1].

This will be the basic building block of the new scheduling

algorithms in Section III. For this purpose, we consider

a SUCCESS WDM-PON system with WONUs (therefore

Wwavelengths), Mtunable transmitters, and Ntunable

receivers. Because the tunable transmitters are used for both

upstream and downstream traffic but tunable receivers are only

for upstream traffic, we usually need more transmitters than

receivers, i.e.,W≥M≥N. We include in the algorithm

description the guard band of Gns between consecutive

SUCCESS WDM-PON frames that accounts for the effects

of unstable local ONU clock frequencies and tuning time of

tunable transmitters and receivers at the OLT.

We define the following arrays of global status variables

used in the algorithm description:

•CAT: Array of Channel Available Times. CAT[i]=t,

where i= 1,2, ..., W , means that the wavelength λiwill

be available for transmission after time t.

•TAT: Array of Transmitter Available Times. TAT[i]=t,

where i= 1,2, ..., M , means that the ith tunable trans-

mitter will be available for transmission after time t.

•RAT: Array of Receiver Available Times. RAT[i]=t,

where i= 1,2, ..., N , means that the ith tunable receiver

will be available for reception after time t.

•RTT: Array of round trip times (RTTs) between the OLT

and the ONUs. RTT[i] denotes the RTT between the OLT

and the ith ONU.

When scheduling each SUCCESS WDM-PON frame, we

first select the earliest available transmitter and receiver. As-

suming that the ith transmitter and the jth receiver are the

earliest available transmitter and receiver respectively, we can

obtain the transmission time tof a SUCCESS WDM-PON

frame destined for the kth ONU as follows:

t=

max(RAT [j] + G−RT T [k]−GOH ,

T AT [i] + G, CAT [k] + G)

if the frame is a grant for upstream traffic,

max(T AT [i] + G, CAT [k] + G)

if the frame is for downstream traffic, (1)

where GOH is a transmission delay for the grant overhead

consisting of the overhead, 8-bit flags and grant fields of the

SUCCESS WDM-PON grant frame at a line rate Rbit/s. If

the frame is a grant frame for upstream traffic, the reception

of the corresponding upstream frame from the ONU should

be scheduled at t+GOH +RT T [k].

After scheduling the frame transmission and/or reception,

the related status variables should be updated as follows:

T AT [i] = t+l/R

CAT [k] = t+l/R ,(2)

and if the frame is a grant frame for upstream traffic,

RAT [j] = t+l/R +RT T [k],(3)

where lis the length of the whole frame in bits.

Fig. 4 illustrates the timing relations among tunable trans-

mitters and receivers, and frames over channels through an

example: At t1, a report for upstream traffic from ONU4

arrives at the OLT. First, the scheduler at the OLT checks

the transmitter availability and finds that TX3is available

now. Then, it checks the receiver availability and finds that

RX1will be available at t0+GOH +RT T1+lcw1. Then,

it also checks the channel availability and finds that λ4is

available now. Finally, based on all these information, the

scheduler schedules the transmission of a grant frame at

t0+RT T1+lcw1+G−RT T4through TX3on λ4and the

reception of a corresponding upstream frame from ONU4at

t0+GOH +RT T1+lcw1+G. Pseudocode for the whole

procedure is given in Fig. 5.

III. DESIGN OF NEW BATCH AND SEQUENTIAL

SCHEDULING ALGORITHMS FOR SUCCESS WDM-PON

In this section we describe two new scheduling algorithms

– the BEDF and the S3F – designed in order to improve the

following performance measures over the original sequential

scheduling algorithm: 1) Fairness guarantee between upstream

KIM et al.: SCHEDULING ALGORITHMS FOR WDM-PON UNDER SUCCESS-HPON 5

RX1

RX2

TX1

TX2

TX3t1

RTT4

λ

1

λ

2

λ

4

G

λ

4

λ

1

New transmission

scheduled!

lcw1

RTT1

λ

2

λ

1

Time

Grant Overhead

(= OH + Flags + Grant)

CW1

CW2

CW3

lcw3

lcw2

t0

Fig. 4. An example of the original sequential scheduling at t1for a system

with W= 4,M= 3 and N= 2.

begin

k←− destination(frame);

l←− length(frame);

tnow ←− current time;

for i= 1 to Wdo CAT [i]←− max(tnow , CAT [i]);

for i= 1 to Mdo T AT [i]←− max(tnow , T AT [i]);

for i= 1 to Ndo RAT [i]←− max(tnow , RAT [i]);

select is.t. T AT [i]≤T AT [m]∀m= 1,...,M;

if the frame is a grant for upstream traffic then

select js.t. RAT [j]≤RAT [n]∀n= 1,...,N;

t←− max(RAT [j] + G−RT T [k]−

GOH , T AT [i] + G, CAT [k] + G);

schedule reception at time t+GOH +RT T [k]with

the jth receiver via the wavelength λk;

RAT [j]←− t+l/R +RT T [k];

elset←− max(T AT [i] + G, CAT [k] + G);

end

T AT [i]←− t+l/R;

CAT [k]←− t+l/R;

schedule transmission at time twith the ith transmitter via

the wavelength λk;

end

Fig. 5: Pseudocode for the original sequential scheduling

algorithm.

and downstream traffic flows for a given ONU and 2) overall

throughput. Here we use a simple but intuitive definition of

’fairness’: On the assumption that all received traffic flows

are legitimate, the scheduler assigns bandwidth so that the

resulting throughput of a traffic flow should be in propor-

tion to its incoming rate. By ‘traffic flow’ we mean the

aggregated traffic between the OLT and each ONU in each

direction (upstream or downstream); thus, the scheduler at

the OLT deals with a total of 2Wseparate traffic flows. In

the original sequential scheduling algorithm, a downstream

Ethernet frame is encapsulated in a SUCCESS WDM-PON

frame immediately after its arrival and put into a global

FIFO queue that is shared by all upstream and downstream

traffic. As the simulation results in [1] show, the lack of

protection for memory space among traffic flows leads into

poor fairness between upstream and downstream traffic. Also,

because there is no room for optimization in scheduling, the

maximum achievable throughput is much lower than the total

transmission capacity. To address the issue of memory space

protection among traffic flows, we base both the scheduling

algorithms on VOQing with one VOQ per traffic flow either

upstream or downstream for an ONU.

A. Batching Earliest Departure First (BEDF) Scheduling

The idea of batch scheduling, where a batch of arrived

messages during a certain period forms a task set to which

a scheduling algorithm is applied, has been already studied

in [12], but in a slightly different context where the main

concern is the reduction of the frequency and complexity of

the scheduling algorithm at the cost of deferring consideration

of new tasks. On the other hand, the major concern in our

design of the BEDF is to provide room for optimization in

scheduling by forming a task set consisting of multiple frames

by batching process. Rather than sequentially scheduling each

frame in the order of arrival, by forming a batch of arrived

frames and searching for a frame with an optimal value

according to a given scheduling policy, we can optimize

the scheduling performance. Because transmission efficiency

under the constraint of sharing limited resources is one of

the major design goals, we select the EDF as an optimal

scheduling policy to minimize the time when transmitters and

channels are wasted.

Building upon the basic sequential scheduling algorithm de-

scription in Section II, we can describe the BEDF scheduling

algorithm as follows: At the end of each batch period,

Step 1 Choose the earliest available transmitter and receiver

(i.e., whose TAT and RAT are minimum).

Step 2 Given the earliest available transmitter and receiver,

calculate a possible transmission time using Eq. 1 for

the first unscheduled frame in each VOQ that is not

marked as ‘Unschedulable’.

Step 3 Select the frame with the minimum transmission time

(i.e., the earliest departure time) and if the transmission

time is within the boundary of the next batch period,

schedule its transmission; otherwise, cancel its schedul-

ing and mark the corresponding VOQ as ‘Unschedu-

lable’. If the scheduled frame is a grant for upstream

traffic, schedule the reception of the corresponding up-

stream frame from the ONU after GOH +RT T from

its transmission time.

Step 4 Update the status variables using Eq. 2 for the transmit-

ter, the channel and if needed, the receiver.

Step 5 Repeat the whole procedures from the steps 1 through

4 until there is no unscheduled frame or all VOQs are

marked as ‘Unschedulable’.

Note that in contrast to the batch scheduling scheme

proposed in [12], once the scheduled transmission time ex-

ceeds the boundary of the next batch period, we cancel

the scheduling of that frame, mark the corresponding VOQ

as ‘Unschedulable’, and exclude the frames in that VOQ

in further scheduling during the current batch period. This

prevents the frames arriving in the current batch period from

consuming the resources available in the next batch period

and therefore provides some protection for network resources

between batches of frames. The interleaving of scheduling and

6

6

7

7

8

8

9

9

10

10

11

11

12

12

13

13

14

14

15

15

1

/

15

100%

![Operating System Concepts, 8th Edition[A4]](http://s1.studylibfr.com/store/data/010059796_1-0c1bb1698b3b92038c6a3b124eba87c0-300x300.png)