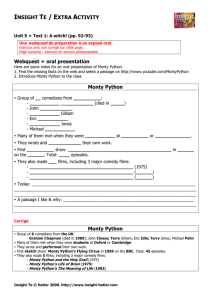

Accelerating Random Forests in Scikit-Learn Gilles Louppe August 29, 2014 Universit´

Accelerating Random Forests in Scikit-Learn

Gilles Louppe

Universit´e de Li`ege, Belgium

August 29, 2014

1 / 26

Motivation

... and many more applications !

2 / 26

Machine Learning 101

•Data comes as...

A set of samples L={(xi,yi)|i= 0,...,N−1}, with

Feature vector x ∈Rp(= input), and

Response y∈R(regression) or y∈ {0,1}(classification) (=

output)

•Goal is to...

Find a function ˆy=ϕ(x)

Such that error L(y,ˆy) on new (unseen) xis minimal

5 / 26

6

6

7

7

8

8

9

9

10

10

11

11

12

12

13

13

14

14

15

15

16

16

17

17

18

18

19

19

20

20

21

21

22

22

23

23

24

24

25

25

26

26

27

27

28

28

29

29

30

30

31

31

1

/

31

100%

![[arxiv.org]](http://s1.studylibfr.com/store/data/009162834_1-4be7f5511ba1826a40dc7d0928be8c61-300x300.png)