Boolean & Vector Space Models in Information Retrieval

Uploaded by Rihab BEN LAMINE

Boolean Model

Hongning Wang

CS@UVa

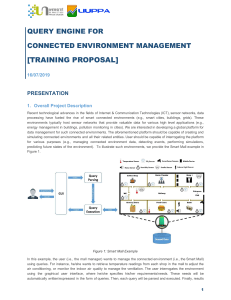

Abstraction of search engine architecture

[Figure: search engine architecture diagram. Component labels: User, (Query), Query Rep, Ranker, results, Index, Indexer, Doc Representation, Doc Analyzer, Crawler, Feedback, Evaluation. Region labels: Indexed corpus, Ranking procedure.]

CS@UVa CS 4501: Information Retrieval 2

Search with Boolean query

•Boolean query

–E.g., “obama” AND “healthcare” NOT “news”

•Procedures

–Look up each query term in the dictionary

–Retrieve the posting lists

–Operation

•AND: intersect the posting lists

•OR: union the posting lists

•NOT: diff the posting lists
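The procedure above can be sketched in a few lines of Python. This is a minimal illustration, not a production design: the toy index, its document IDs, and the helper names (`postings`, `op_and`, `op_or`, `op_not`) are all assumptions made up for the example, and set operations stand in for the posting-list merge a real engine would use.

```python
# Toy inverted index: term -> sorted list of document IDs.
# The terms come from the example query; the document IDs are invented.
index = {
    "obama": [1, 2, 4, 7, 9],
    "healthcare": [2, 3, 4, 9, 10],
    "news": [4, 5, 10],
}


def postings(term):
    """Look up a term in the dictionary; unknown terms have empty postings."""
    return index.get(term, [])


def op_and(a, b):
    """AND: documents that appear in both posting lists."""
    return sorted(set(a) & set(b))


def op_or(a, b):
    """OR: documents that appear in either posting list."""
    return sorted(set(a) | set(b))


def op_not(a, b):
    """NOT: documents in a that do not appear in b (a AND NOT b)."""
    return sorted(set(a) - set(b))


# "obama" AND "healthcare" NOT "news"
result = op_not(
    op_and(postings("obama"), postings("healthcare")),
    postings("news"),
)
print(result)  # [2, 9]
```

NOT is treated here as a set difference against an already-retrieved list, which avoids having to enumerate every document that does not contain a term.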


Search with Boolean query

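•Example: AND operation

Term1: 2 4 8 16 32 64 128

Term2: 1 2 3 5 8 13 21 34

Scan the two posting lists in parallel, always advancing the pointer at the smaller document ID; the matches here are 2 and 8

Time complexity: O(|L1| + |L2|), where |L1| and |L2| are the lengths of the two posting lists

Trick for speed-up: when performing a multi-way join, start from the lowest-frequency term and proceed to the highest-frequency ones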
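As a rough sketch of the scan and the speed-up trick, the code below intersects sorted posting lists with two pointers and orders a multi-way join from the shortest list to the longest. The function names `intersect` and `intersect_many` are hypothetical; the two posting lists are the ones from the example.

```python
def intersect(p1, p2):
    """Intersect two sorted posting lists with a linear two-pointer scan.

    Each step advances at least one pointer, so the running time is
    O(len(p1) + len(p2)).
    """
    i, j, out = 0, 0, []
    while i < len(p1) and j < len(p2):
        if p1[i] == p2[j]:
            out.append(p1[i])
            i += 1
            j += 1
        elif p1[i] < p2[j]:
            i += 1
        else:
            j += 1
    return out


def intersect_many(lists):
    """Multi-way AND: join posting lists from shortest to longest.

    Starting with the rarest term keeps the intermediate result small,
    and the loop can stop early once the result becomes empty.
    """
    if not lists:
        return []
    ordered = sorted(lists, key=len)
    result = ordered[0]
    for p in ordered[1:]:
        if not result:
            break
        result = intersect(result, p)
    return result


term1 = [2, 4, 8, 16, 32, 64, 128]
term2 = [1, 2, 3, 5, 8, 13, 21, 34]
print(intersect(term1, term2))  # [2, 8]
```

Here list length stands in for term frequency, on the assumption that each posting list holds one entry per document containing the term.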


Deficiency of Boolean model

•The query is unlikely to be precise

–“Over-constrained” query (terms are too specific): no relevant documents can be found

–“Under-constrained” query (terms are too general): over-delivery (too many documents are returned)

–It is hard to find the right position between these two extremes (hard for users to specify constraints)

•Even if the query is accurate

–Not all users would like to use such queries

–Not all relevant documents are equally important

•No one would go through all the matched results

•Relevance is a matter of degree!
