CSC2515 Fall 2020 Midterm Test

Start your scan at this page.

1. True/False [16 points] For each statement below, say whether it is true or false, and

give a one or two sentence justification of your answer.

(a) [2 points] As we increase the number of parameters in a model, both the bias

and variance of our predictions should decrease since we can better fit the data.

(b) [2 points] We can always improve the performance of clustering algorithms like

k-means by removing features and reducing the dimensionality of our data.

(c) [2 points] Bagging and boosting are both ways of reducing the variance of our

models.

(d) [2 points] Machine learning models work automatically without the need for the

user to manually set hyperparameters.

1

!"#$% &

'#()*+,) -*.% /"."-%(%.$ )%#/ 0"/(+.% ()% $(.+0(+.% *1 2"(" "32 .%2+0%$ 45"$ 6

"$ 7% "22 -*.% /"."-%(%.$ 6()% *8%."## -*2%# 5$ -*.% 0*-/#%9 6

"32 7% %9/%0( 0*-/#%9 -*2%#$ *8%. 15( 67)50) 75## 530.%"$% ()% 8".5"30%

!"#$% &

:3#%$$ ()% 2"(" ".% 53(.53$50"##; #*7 <25-%3$5*3"# &.%-*853, 1%"(+.%$ ="32 2%-*3$ =5*3

.%2+0(5*3 0"3 0"+$% #*$$ *1 =5-/*.("3( 1"0(*.$ *1 =8".5"(5*3 &="32 2"(" 531*.-"(5*3 #*$$

>.+% &

?",,53, .%2+0%$ 8".5"30% *1 -*2%#$ $530% =7% =".% "8%.",53, *8%. %9"-/#%$

?**$(53, .%2+0%$ 8".5"30% *1 =-*2%#$ 4%0"+$% =5( ("@%$ =7%5,)(%2 "8%.",% *1 =7%"@ #%".3%.$ &

!"#$% &

:$%. 3%%2$ =(* /.*/%.#; $%( );/%.

/"."-%(%.$ -"3+"##; (* "=."3,% =*. =$/%05150 8"#+%

"( =()% 4%,53353, 6

"32 ()% =-*2%# 0"3 0*-/".% 8"#52"(5*3 %..*.$ "+(*-"(50"##; (* $%#%0(

()% 4%$( );/%./"."-%(%. ,58%3=53 "=."3,% 2%153%2 4; +$%.$ &

A& ,&B=53 @<CC D

E53 -535 <4"(0) $(*0)"$(50 ,."25%3(=2%$0%3( &

CSC2515 Fall 2020 Midterm Test

(e) [2 points] Most machine learning algorithms, in general, can learn a model with

a Bayes optimal error rate.

(f) [2 points] The k-means algorithm is guaranteed to converge to the global mini-

mum

(g) [2 points] When optimizing a regression problem where we know that only some

of our features are useful, we should use L1regularization.

(h) [2 points] For a given binary classification problem, a linear SVM model and a

logistic regression model will converge to the same decision boundary.

2

!"#$% &

?";%$ */(5-"# %..*. ."(% 5$ =()% 4%$( 7% =%8%. )*/% (* 2* =75() "3; #%".353, "#,*.5()- 6

7)50) ;5%#2$ F%.* 45"$ ="32 8".5"30% &G".2#; "3; "#,*.5()- =0*8%.%2 53 ()5$ 0*+.$% "0)5%8%$ =()5$

&

!"#$% &

>)% =*4H%0(58% I#

J- K$ (. LM*1 @<-%"3$ 5$ =3*( 0*38%9 6

$* =0**.253"(% 2%$0%3( =*3 I5$

3*( ,+"."3(%%2 (* 0*38%.,% (* ()% ,#*4"# -535-+- &'#$* =()%.% 5$ =3*()53, (* /.%8%3(

B<-%"3$ =$(+0@ 53 #*0"# -535-" 53 ()% "#,*.5()- &

>.+% &

J1 ;*+ 4%#5%8% *3#; "$-"## 1."0(5*3 *1 =-"3; 1%"(+.%$ =5$ #5@%#; (* 4% .%#%8"3( 6

;*+ =+$% N6.%,+#".5F"(5*3 ()"( %30*+.",%$ =$-"## 7%5,)($ =(* 4% =%9"0(#; F%.* ="32 ,58%$ ;*+

$/".$% */(5-"# 7%5,)( 8%0(*.

!"#$% &>)%; ".% $5-5#". 4+( 3*( ()% $"-% &>)% "$-/(*50 4%)"85*. *1

N*,5$(50 <O.*$$ &A3(.*/; #*$$ =5$ =$5-5#". (* ()% G53,% #*$$ 53 EPQ 6

$* =()%; ".% $+,,%$(53, 8%.; $5-5#". 7%5,)($ 1.*- ,."25%3( 2%$0%3(

6

()+$ $5-5#". =2%05$5*3 4*+32".5%$ 64+( =3*( =()% $"-% &

R

!53% M

EPQ S73&/5&3,&A&05 <

=K;#T0+.4 6JUF(.#7##

K

#*$$

V

WN53%". O#"$$515%. 75() G53,% #*$$ "32 N" .%,+#".5F"(5*3 <

<= $ *R

N*,5$(50 X%,.%$$5*3

-53 !J

6

N50% OY &=(M("## ZJ>

F

CSC2515 Fall 2020 Midterm Test

2. Probability and Bayes Rule [14 points]

You have just purchased a two-sided die, which can come up either 1 or 2. You want

to use your crazy die in some betting games with friends later this evening, but first

you want to know the probability that it will roll a 1. You know know it came either

from factory 0 or factory 1, but not which.

Factory 0 produces dice that roll a 1 with probability 0. Factory 1 produces dice that

roll a 1 with probability 1. You believe initially that your die came from factory 1

with probability ⌘1.

(a) [2 points] Without seeing any rolls of this die, what would be your predicted

probability that it would roll at 1?

(b) [2 points] If we roll the die and observe the outcome, what can we infer about

where the coin was manufactured?

(c) [2 points] More concretely, let’s assume that:

•0= 1: dice from factory 0 always roll a 1

•1=0.5: dice from factory 1 are fair (roll a 1 with probability 0.5)

•⌘0=0.7: we think with probability 0.7 that this die came from factory 1

3

2%3*(% .*## "$ [0< \] &JL&D1"0(*.; "$ (% #* 6

JL

^.0 9V_M V/09

VJJA< = * M&

^.%(*

MU

/09 <_

J(V/ 6&

^.%( <

<

(M

V2* &O# <;6

M(]6`6

1"0(*.; "$ (% #* 6

JLD)"4 5$ 0*+3( 1*. 9HV"

2%3*(% .*## "$ [O<

R_6\R

&J%<

<4

?";%$

K

X+#% ^%(%

KMVa 6&

b9V\

J<]&

J31%. /*$(%.5*. S

/0(#9 MV

^Q&Q[b(M'/0(c&/09J(

Md*

6

/09 MbJ<

a

69&

<J]b]

/%( <

<JJ[ <

<9M'/%( K$ /0[VeGV T

W3*.-"#5F%

!"0(*.; (* 6

f/%("# 9<

<9Mg/0(<*#/O[<9(&(* M1# h

6

/%( K( J[ Ma6

h]

6

/0(%*#9 M

CSC2515 Fall 2020 Midterm Test

(d) [2 points] Now we roll it, and it comes up 1! What is your posterior distribution

on which factory it came from? What is your predictive distribution on the value

of the next roll?

(e) [2 points] You roll it again, and it comes up 1 again.

Now, what is your posterior distribution on which factory it came from? What is

your predictive distribution on the value of the next roll?

(f) [2 points] Instead, what if it rolls a 2 on the second roll?

(g) [2 points] In the general case (not using the numerical values we have been using)

prove that if you have two observations, and you use them to update your prior

in two steps (first conditioning on one observation and then conditioning on the

second), that no matter which order you do the updates in you will get the same

result.

4

i

/0(V##9V#

M'/0 9<

<

_b<_V_

M/%(5(

MV

]&je]&cV]<Rj

^O 1< =]bcV_ M'/59%# b(V*c/0(V*JV _e]&RV]<R

/0(<*#9(<**&5$k*&F$<<*&lmF/0[KVJ#9J<1/#<N#9M/#[KV##(J2(^O<NV

JJ[MV

n&jRo

V]&lm\e_<_]&jRoe]&j

]&R<_]&Rj

V

V]&cR_

2

^0( <l 6[6VN 6__\V_c '^0( <

<_M ^O[!JG

K( M/59 J( <<

__V]&ce]&je]&j<<]&_cj

/%( <

<*6[6Vb 6[F K( M"/0(<*$/091(#((*#/09F&(# <_<<]cV]&Re_e_ V= * <R

/0( <

<'9

MV>511( <]&Rmo /, 9K WJ9M<E/0(#95/09 K( J( M2(

/%( <l]_ec

V*,p <

<]&mR\ V=]&Rmoe]&j

<_]<mR\e_V]&o_m

%

5( 5$ 1.*- 1"0(*.; _& 4%0"+$% 250%

1.*- 1"0(*.; *3%8%. .*##$ \

&

^%(% ]_e_V]

1

^OA <__e_V_

/%( 69 6

&+"b/0(J9>/9FG>1g/0(R</095V95J(M&/09FV9;(

M

<

&/q[gV[5#( M

g

/%( M&/09"

<

<"1( M

_/0(9&/%kb

CSC2515 Fall 2020 Midterm Test

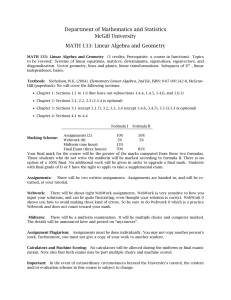

3. Decision Trees & Information Gain [10 points]

16,16

12,4

a, b ,

4,12

x, y ,

The figure above shows a decision tree. The numbers in each node represent the number

of examples in each class in that node. For example, in the root node, the numbers

16,16 represent 16 examples in class 0 and 16 examples in class 1. To save space,

we have not written the number of examples in two right leaf nodes as they can be

deduced based on the values in the left leaf nodes.

(a) [2 points] What is the information gain of the split made at the root node?

(b) 2points]What values of aand bwill give the smallest possible value of the

information gain at this split, and what is the value?

(c) [2 points] What values of xand ywill give the largest possible value of the

information gain at this split, and what is the value?

5

Jr J$/#5( MVGO` M<qJG+%(( M(5(#% =.5,)($ M

VJ<d9

1< 5( #*,$ k<1#*, 5<F0<51(*,&J> <!1 #*, &kJs

VJ<n&o_

J]&_t

J1 0$/#5($ VGO` M<GO`J[ MR] $530% GA` MuGO`J[ M

Jr J$/#5( M-53

Vn7)%3 G% Ka VGO`J[ MV=<51 7,F <h#*,*11

JJJ&

"(

vh0w46

WJA

S*.,

TT9*9* 6

4<<l

J'O $/#5( MVG%; M<GO`J[ M$GO` M7)%3 GO`J[ MVn

G0#%1($ =VG% .5,)( _V] WJ[;J *. 1; J

6

6

7

7

8

8

9

9

10

10

11

11

12

12

13

13

14

14

15

15

16

16

17

17

18

18

19

19

20

20

21

21

22

22

23

23

24

24

25

25

26

26

27

27

28

28

29

29

1

/

29

100%

![Probiolite Reviews: [Scam Or Legit] Update 2021 || Is It Really Work?](http://s1.studylibfr.com/store/data/010096955_1-6844df3a24d266a838e3ff88d79a995f-300x300.png)